Spider-Man, Security Questions, and Identity Fraud: A Cybersecurity Story

Ever seen that classic Spider-Man meme where three Spideys are pointing at each other, accusing the others of being impostors? It’s the perfect representation of identity confusion—after all, depending on whom you ask, the “real” Spider-Man could be Tobey Maguire, Andrew Garfield, or Tom Holland.

It all comes down to context and baseline—what you grew up with, what you expect, and what “normal” looks like to you.

The same applies to identity investigations: one moment, users are securely logging into their accounts, and the next, someone else is out there pretending to be them, causing chaos. Without the right context and baseline, how do you determine who’s the real one? Suddenly, the user’s identity is caught in a high-stakes game of “who’s who?”, and trust us, it’s a lot less funny when you’re the one stuck in the web.

On the Cato MDR team, we routinely monitor and investigate identity stories like these. Here’s how we approach identity investigations. Follow these steps and you, as a security engineer, will have the right information to make that fateful call: whether to allow the user who claims to be the CIO onto the network, or to block them.

The Many Faces of Identity Attacks

Identity attacks come in many flavors, and none of them are sweet. Account Takeover (ATO) is a top seller among attackers: once they steal or guess users’ credentials, they waltz into the victims’ accounts like they own the place, often locking the real users out while they commit fraud or spread malware.

Then there’s Identity Theft, where cybercriminals don’t just steal a user’s login—they steal everything about the user, using the user’s personal info to open accounts, apply for loans, or worse. Credential Stuffing is the brute-force equivalent of “spray and pray,” where attackers take leaked username-password combos and try them everywhere, banking on people reusing passwords (spoiler: they do).

And let’s not forget Phishing, the digital art of deception, where attackers trick users into handing over credentials through emails, fake websites, or even well-timed social engineering calls.

Finally, there’s Business Email Compromise (BEC), the corporate cousin of phishing. In BEC attacks, cybercriminals spoof or hijack business email accounts—often those of executives or finance teams—to trick employees into wiring money, sending sensitive data, or approving fraudulent invoices. It’s low-tech but high impact, relying more on trust and timing than technical wizardry.

The common theme? If an attacker can pretend to be a user, they can cause serious damage – unless you make their job harder.
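Of these attacks, credential stuffing tends to leave a recognizable footprint in sign-in logs: a single source trying many different usernames, most of which fail. The sketch below flags such sources from a list of sign-in attempts. It is a minimal illustration under stated assumptions, not how any particular product detects the attack; the `SignIn` record shape and the thresholds (`min_users`, `min_failure_rate`) are hypothetical values chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SignIn:
    # Hypothetical, simplified sign-in record; real logs carry far more fields.
    source_ip: str
    username: str
    success: bool


def flag_stuffing_sources(events, min_users=20, min_failure_rate=0.9):
    """Return source IPs that tried many distinct usernames and mostly failed.

    Both thresholds are illustrative assumptions, not tuned values.
    """
    users_by_ip = defaultdict(set)
    attempts = defaultdict(int)
    failures = defaultdict(int)
    for e in events:
        users_by_ip[e.source_ip].add(e.username)
        attempts[e.source_ip] += 1
        if not e.success:
            failures[e.source_ip] += 1

    flagged = []
    for ip, users in users_by_ip.items():
        failure_rate = failures[ip] / attempts[ip]
        # Many different accounts AND mostly failures: the stuffing signature.
        if len(users) >= min_users and failure_rate >= min_failure_rate:
            flagged.append(ip)
    return sorted(flagged)


if __name__ == "__main__":
    # One IP cycling through 25 leaked usernames (one guess works), plus a normal user.
    events = [SignIn("203.0.113.7", f"user{i}", False) for i in range(25)]
    events.append(SignIn("203.0.113.7", "user3", True))
    events.append(SignIn("198.51.100.20", "alice", True))
    print(flag_stuffing_sources(events))  # ['203.0.113.7']
```

Counting distinct usernames per source, rather than raw failures, is what separates stuffing from a single user who simply forgot their password.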

The Thin Line Between True Positives and False Positives – How Do You Decide?

Identity investigations aren’t just about spotting anomalies—they’re about knowing which questions to ask. A single red flag doesn’t always mean danger, and a seemingly normal login can sometimes be the start of an attack. After all, a Spidey wearing a red uniform and another wearing a black uniform might be dressed differently, but they can still be the same Spidey (right, Marvel fans?). So, how do you separate real threats from innocent quirks in user behavior? The key is in the details:

- Where does this user typically connect from? Is this login coming from their usual city, or did they suddenly appear halfway across the world?

- What are the technical fingerprints of this login? A familiar user showing up with an unfamiliar device, OS, or browser might just be using a new laptop, or it might be someone abusing their credentials and hoping no one checks the details.

- Does their job role justify this behavior? Sales and field personnel travel constantly, but should your finance team be logging in from multiple countries in one day?

- How do similar users behave? Is this pattern common among their peers, or is it an outlier?

- Is the anomaly tied to a specific app or a broader pattern? A strange login attempt on one application might be an accident, but unusual access across multiple systems could signal something bigger.

- Is this behavior isolated, or are other users exhibiting it too? If multiple employees—especially across different departments—show similar anomalies, it could indicate a larger issue rather than an individual compromise.

- When was this user even created in the platform? If this is their very first login, it could be a legitimate new employee—or a freshly created rogue account flying under the radar.
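Several of these questions can be turned into simple checks against a per-user baseline. The sketch below assumes a hypothetical history of past sign-ins per user (country plus an OS/browser fingerprint), an account creation date, and a set of sign-ins from peers in the same role; all field names, the 7-day "new account" cutoff, and the `peer_threshold` value are illustrative assumptions, not the Cato XDR data model. It deliberately returns reasons to look closer rather than a verdict, since a single red flag is not proof of compromise.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Login:
    # Hypothetical, simplified sign-in record.
    user: str
    country: str
    os: str
    browser: str
    time: datetime


def review_login(login, history, created_at, peer_history, peer_threshold=0.05):
    """Return human-readable reasons why this login deserves a closer look.

    history: this user's past Login records (the baseline).
    created_at: when the account was created in the platform.
    peer_history: past Login records of users in the same role or department.
    """
    reasons = []

    # Where does this user typically connect from?
    if login.country not in {h.country for h in history}:
        reasons.append(f"new country for user: {login.country}")

    # What are the technical fingerprints of this login?
    if (login.os, login.browser) not in {(h.os, h.browser) for h in history}:
        reasons.append(f"new device fingerprint: {login.os}/{login.browser}")

    # When was this user created? A brand-new account has little or no baseline.
    if login.time - created_at < timedelta(days=7):
        reasons.append("account created less than 7 days ago")

    # How do similar users behave? A location rare among peers is more suspicious.
    if peer_history:
        share = sum(p.country == login.country for p in peer_history) / len(peer_history)
        if share < peer_threshold:
            reasons.append(f"only {share:.0%} of peer sign-ins come from {login.country}")

    return reasons


if __name__ == "__main__":
    t0 = datetime(2024, 5, 1, 9, 0)
    history = [Login("cfo", "US", "Windows", "Edge", t0 - timedelta(days=d)) for d in range(1, 30)]
    peers = [Login("fin2", "US", "Windows", "Edge", t0)] * 50
    suspect = Login("cfo", "HU", "Linux", "Firefox", t0)
    for reason in review_login(suspect, history, t0 - timedelta(days=400), peers):
        print(reason)
    # new country for user: HU
    # new device fingerprint: Linux/Firefox
    # only 0% of peer sign-ins come from HU
```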

By asking the right questions, security teams can move beyond surface-level alerts and make confident, informed decisions—minimizing both false positives and real threats slipping through the cracks.

One Source of Truth? Why Settle for Just One?

Relying on the answer to just one of these questions, or on a single data point, in an identity investigation is like trying to solve a mystery with only half the clues. Sure, sign-in events are a great starting point, but stopping there could mean missing the bigger picture. To truly understand whether an activity is suspicious or just an innocent deviation, security teams need to pull in multiple sources of truth.

For example, imagine a user connecting from their usual location, but within minutes, network logs show traffic being routed somewhere unexpected. Maybe the user starts accessing an internal finance tool or the company’s employee management platform—something completely outside the user’s usual workflow. Even more concerning, the user’s email activity suddenly spikes, with sensitive data being sent to external addresses.

Then there are third-party integrations. Many organizations rely on external applications for project management, cloud storage, or financial transactions. If an identity-related anomaly coincides with unusual API activity—like an unauthorized attempt to export company data from a CRM or modify permissions in a cloud platform—it could indicate something much bigger than a simple login issue.

By combining login events, application access, network behavior, email activity, and third-party integrations, security teams can stop treating investigations like guessing games and start making decisions based on a complete, contextualized picture.
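One lightweight way to combine these sources is to gather every event tied to the same user within a short window after a sign-in, then check how many independent sources report something unusual. The sketch below assumes each source has already been normalized into a common, hypothetical `Event` shape, with an `unusual` flag set by that source's own baseline; real pipelines differ, and the 30-minute window and two-source threshold are arbitrary choices for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    # Hypothetical normalized event from any source (sign-in, network, app, email, API).
    user: str
    source: str
    time: datetime
    unusual: bool
    detail: str


def correlate(signin, events, window=timedelta(minutes=30), min_sources=2):
    """Collect unusual events for the sign-in's user within `window` after it.

    Returns (escalate, timeline): escalate is True only when at least
    `min_sources` different sources report something unusual.
    """
    related = [
        e for e in events
        if e.user == signin.user
        and e.unusual
        and signin.time <= e.time <= signin.time + window
    ]
    sources = {e.source for e in related}
    return len(sources) >= min_sources, sorted(related, key=lambda e: e.time)


if __name__ == "__main__":
    t0 = datetime(2024, 5, 1, 9, 0)
    signin = Event("jdoe", "signin", t0, False, "usual location")
    events = [
        Event("jdoe", "app", t0 + timedelta(minutes=4), True, "opened finance tool"),
        Event("jdoe", "email", t0 + timedelta(minutes=11), True, "bulk mail to external domain"),
        Event("jdoe", "network", t0 + timedelta(hours=3), True, "outside the window"),
        Event("asmith", "api", t0 + timedelta(minutes=5), True, "different user"),
    ]
    escalate, timeline = correlate(signin, events)
    print(escalate)  # True
    for e in timeline:
        print(e.time.strftime("%H:%M"), e.source, e.detail)
    # 09:04 app opened finance tool
    # 09:11 email bulk mail to external domain
```

Requiring agreement across sources, rather than reacting to each one, mirrors the scenario above: a normal-looking sign-in becomes interesting only once app access and email activity deviate together.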

Finding Anomalies in a Sea of Data – Simplify with Targeted Visualization

Investigating an identity alert is rarely straightforward (unless you’re just a lucky analyst). You start with a simple question, “Is this login suspicious?”, but quickly find yourself buried in data. As you dig through logs, timelines, and event details, two common patterns emerge:

- Standard Behavior, Unusual Event, Standard Behavior – A user logs in from their usual location, suddenly appears in a different country or on a different device, and then continues as if nothing happened. Was it a VPN? A compromised session? A teleporter (probably not)?

- Standard Behavior and then something completely different – A user always logs in from New York, but today, the user is in Hungary or Austria with a brand-new device. That’s not just unusual; it’s an immediate red flag.
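The two patterns can be told apart by comparing a time-ordered sequence of one attribute (here, sign-in country) against the user's baseline. The sketch below is one simplified way to label them, offered as an assumption rather than a production rule: unfamiliar values followed by a return to the baseline form a blip, while unfamiliar values that persist through the most recent events suggest a shift. The function name, the labels, and the `min_tail` parameter are hypothetical.

```python
def classify_pattern(baseline, recent, min_tail=2):
    """Label a time-ordered list of attribute values relative to a baseline set.

    Returns "normal", "blip" (standard, unusual, standard), "shift"
    (standard, then something completely different), or "watch" when
    too few recent events exist to tell blip from shift.
    """
    unusual = [value not in baseline for value in recent]
    if not any(unusual):
        return "normal"

    # Count how many of the most recent events are consecutively unfamiliar.
    tail = 0
    for flag in reversed(unusual):
        if not flag:
            break
        tail += 1

    if tail == 0:
        return "blip"   # unusual event(s), then back to the baseline
    if tail >= min_tail:
        return "shift"  # the latest events all sit outside the baseline
    return "watch"      # only the very last event is unusual so far


if __name__ == "__main__":
    home = {"US"}
    print(classify_pattern(home, ["US", "US", "HU", "US", "US"]))  # blip
    print(classify_pattern(home, ["US", "US", "HU", "AT", "HU"]))  # shift
    print(classify_pattern(home, ["US", "US", "US", "HU"]))        # watch
```

A "blip" is not automatically benign; it only narrows the next question (VPN or compromised session?), while a "shift" calls for immediate follow-up.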

These patterns should be easy to catch, but when you’re dealing with millions of authentication events, even obvious anomalies get lost in the noise. Searching for specific indicators—like new sign-in locations, unusual OS types, or mismatched user agents—can become overwhelming when spread across multiple raw data logs. Even when you know what you’re looking for, too much data makes the investigation harder, not easier.

This is where simpler visualizations become your hero. Instead of forcing analysts to manually correlate sign-in data across different attributes, a well-structured graph can do the heavy lifting. By overlaying multiple values—sign-in location, source IP, device type, client classification—within the same timeframe, the anomalies don’t just appear; they jump off the screen. A user’s login behavior should follow a predictable pattern, and when it doesn’t, the deviation is instantly visible.
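Underneath, charts like those in Figures 1 and 2 are essentially counts of sign-ins per time bucket, broken down by each attribute's values. The sketch below builds that table for several attributes at once and lists any value that never appeared during a baseline period, which is exactly what makes an anomaly jump off the chart. It is an illustration only: the event dictionaries, attribute names, and one-day bucket are assumptions, not how Cato XDR computes its graphs.

```python
from collections import Counter, defaultdict
from datetime import datetime


def distributions(events, attributes, bucket="%Y-%m-%d"):
    """Count events per time bucket for each value of each attribute.

    Returns {attribute: {bucket_label: Counter({value: count})}}.
    """
    table = {attr: defaultdict(Counter) for attr in attributes}
    for e in events:
        label = e["time"].strftime(bucket)
        for attr in attributes:
            table[attr][label][e[attr]] += 1
    return table


def new_values(table, baseline_buckets):
    """Per attribute, list (bucket, value) pairs never seen in the baseline buckets."""
    findings = {}
    for attr, by_bucket in table.items():
        seen = set()
        for label in baseline_buckets:
            seen.update(by_bucket.get(label, Counter()))
        findings[attr] = sorted(
            (label, value)
            for label, counts in by_bucket.items()
            if label not in baseline_buckets
            for value in counts
            if value not in seen
        )
    return findings


if __name__ == "__main__":
    events = [
        {"time": datetime(2024, 5, 1, 9), "location": "New York", "os": "macOS"},
        {"time": datetime(2024, 5, 2, 9), "location": "New York", "os": "macOS"},
        {"time": datetime(2024, 5, 3, 2), "location": "Budapest", "os": "Linux"},
        {"time": datetime(2024, 5, 3, 9), "location": "New York", "os": "macOS"},
    ]
    table = distributions(events, ["location", "os"])
    print(new_values(table, {"2024-05-01", "2024-05-02"}))
    # {'location': [('2024-05-03', 'Budapest')], 'os': [('2024-05-03', 'Linux')]}
```

Because every attribute is bucketed on the same time axis, the new location and the new OS land in the same bucket, which is the overlap an analyst would spot at a glance on an overlaid chart.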

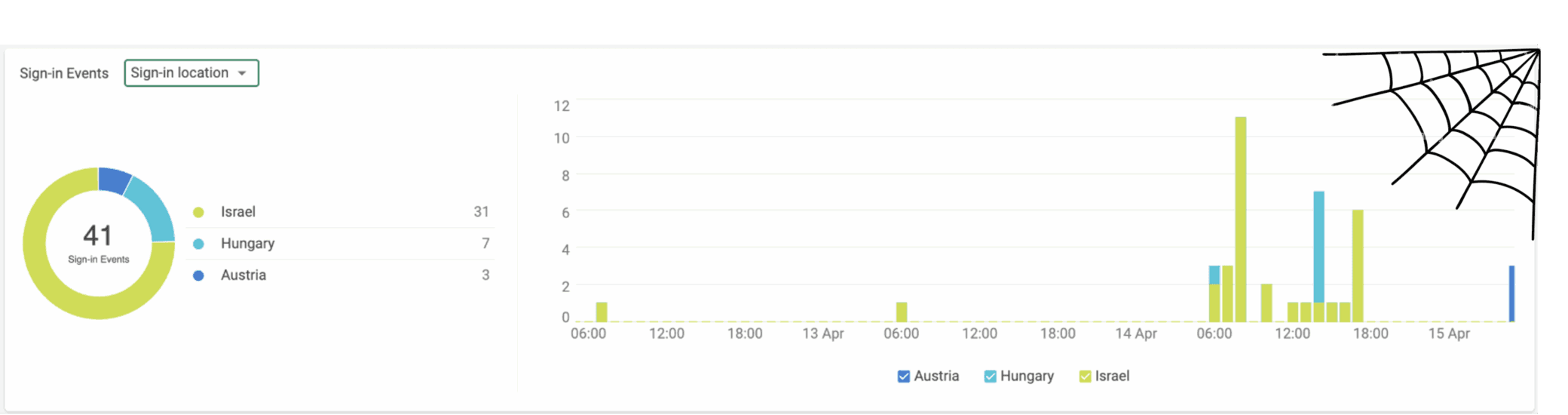

Figure 1. Story distribution by Sign-in location

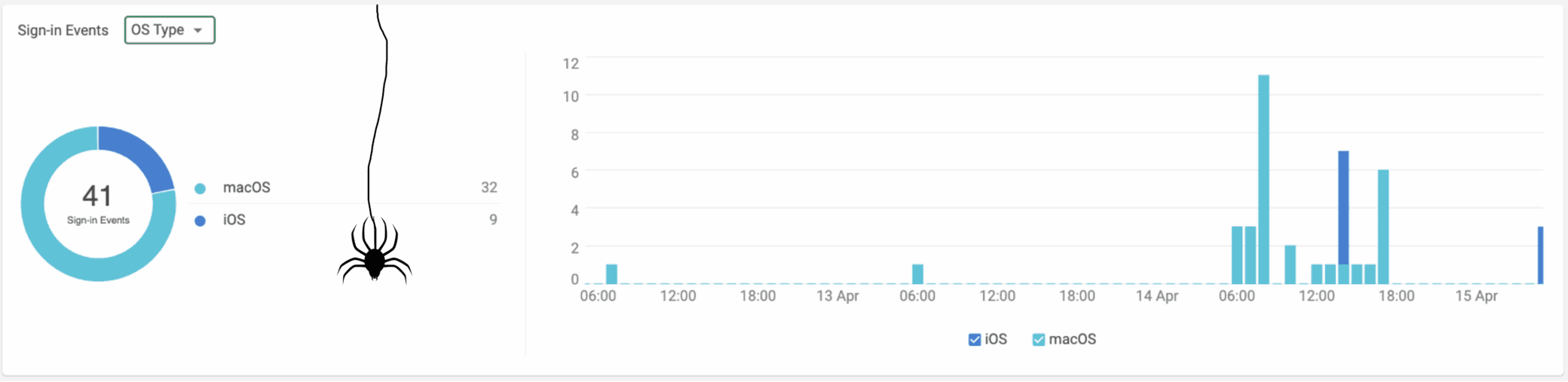

Figure 2. Story distribution by OS Type

This is exactly what we built into Cato XDR. Instead of presenting identity data in a raw, disconnected format, we designed a targeted visualization approach that allows security analysts to immediately spot deviations in user behavior. No more hunting across endless logs, just clear, concise insights that make investigations faster and more effective.

These graphs were pulled straight from Cato XDR, captured just before the Spidey chaos began.

Wrapping Up: From Spidey Memes to Security Mastery

In today’s identity-driven threat landscape, context is everything. Like trying to figure out which Spider-Man is real, understanding the truth behind a user’s behavior means going beyond surface-level alerts. With the right questions, diverse data sources, and clear visualizations, security teams can cut through the noise and uncover real threats faster. Cato XDR empowers analysts with the tools and insights they need to make confident decisions—turning complex identity investigations into a streamlined, effective process. Strong identity security isn’t about guessing—it’s about having the full picture.

Resources:

- Learn more about how the Cato Managed XDR service works here.

- Curious about the solution behind it? Learn more about Cato XDR here.

The post Spider-Man, Security Questions, and Identity Fraud: A Cybersecurity Story appeared first on Cato Networks.