Cato CTRL™ Threat Research: Threat Actors Abuse Simplified AI to Steal Microsoft 365 Credentials

Executive Summary

AI marketing platforms have exploded in popularity, becoming everyday tools for creative teams in enterprises worldwide. Platforms like Simplified AI offer marketers the ability to generate content, clips, and campaigns at scale. For CISOs and IT leaders, approving such services often seems straightforward: allow access, whitelist the domain, and enable the marketing team to innovate. But what if the very same platform, whether already used by your employees or not, is leveraged by threat actors to steal from you?

That’s exactly what our Cato MDR service uncovered. In July 2025, we observed a phishing campaign targeting US-based organizations, one of which, an investment organization, fell victim to the attack. The attack was quickly detected and contained before further compromise occurred, and the campaign is no longer active.

During the phishing campaign, threat actors hosted a phishing webpage under the legitimate Simplified AI domain, blending malicious activity into the daily noise of enterprise traffic. By impersonating an executive from a global pharmaceutical distributor, the threat actors delivered a password-protected PDF that appeared legitimate. Once the file was opened, a link inside it directed the victim to Simplified AI’s website, but instead of generating content, the site became a launchpad to a fake Microsoft 365 login portal designed to harvest enterprise credentials.

This combination of social engineering and phishing highlights a dangerous evolution: threat actors are merging impersonation with sophisticated phishing techniques while exploiting the rapid adoption of AI in enterprise organizations. They are no longer relying on suspicious servers or cheap lookalike domains. Instead, they abuse the reputation and infrastructure of trusted AI platforms. These are platforms your employees already rely on, or that your security team may implicitly trust, allowing threat actors to bypass defenses and slip into your organization under the cover of legitimacy.

Traditional defenses alone can’t stop campaigns that combine social engineering with phishing and abuse trusted AI platforms. The Cato SASE Cloud Platform provides AI-aware visibility and policy enforcement. Combined with the Cato MDR service, which adds expert threat detection and response, it helps customers avoid and mitigate such risks.

Technical Overview

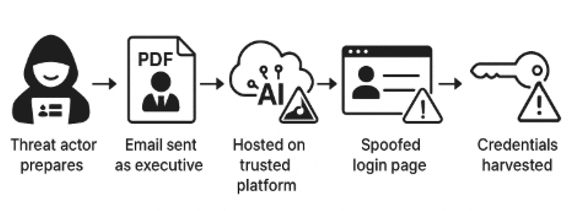

The phishing campaign we investigated began with a suspicious email impersonating an executive from a global pharmaceutical distributor. To boost credibility, the email included the company’s logo and referenced the executive by name, details that we later confirmed to be real via LinkedIn. This combination of authentic branding and impersonation was designed to lower suspicion and convince the victim to engage. Figure 1 illustrates the flow of this campaign, and the following sections elaborate on each step.

Figure 1. Attack flow illustrating the stages of the phishing campaign.

Step 1: Impersonation and Delivery

The threat actors, posing as an executive from a global pharmaceutical distributor, sent a phishing email with a password-protected PDF attachment. Sending a password-protected file may appear to be a legitimate business practice, but in this case it was a deliberate tactic to bypass automated email security scanners, which cannot easily inspect encrypted attachments. The password was included in the email body, so the recipient could open the file without friction.
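The two signals described in this step, an encrypted PDF attachment and a password supplied in the same message, can be checked together. The following is a minimal Python sketch under stated assumptions, not Cato's detection logic: it assumes an encrypted PDF can be recognized by an `/Encrypt` key in its raw bytes (a heuristic that may miss or misfire on unusual files), and the function names and the password-phrase pattern are hypothetical.

```python
import re

# Hypothetical pattern for a password shared in the message body,
# e.g. "Password: Abc123" or "the password is Abc123".
PASSWORD_HINT = re.compile(r"\b(?:password|passcode)\s*(?:is\b|:)\s*\S+", re.IGNORECASE)


def looks_like_encrypted_pdf(data: bytes) -> bool:
    """Heuristic: encrypted PDFs reference an encryption dictionary
    through an /Encrypt key in the trailer or xref stream dictionary."""
    return b"%PDF-" in data[:1024] and b"/Encrypt" in data


def should_quarantine(attachment: bytes, body: str) -> bool:
    """Flag mail that carries an encrypted PDF and also states its password."""
    return looks_like_encrypted_pdf(attachment) and bool(PASSWORD_HINT.search(body))
```

Neither signal is suspicious on its own; the sketch flags a message only when both appear together, since a scanner that cannot open the attachment has little else to go on.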

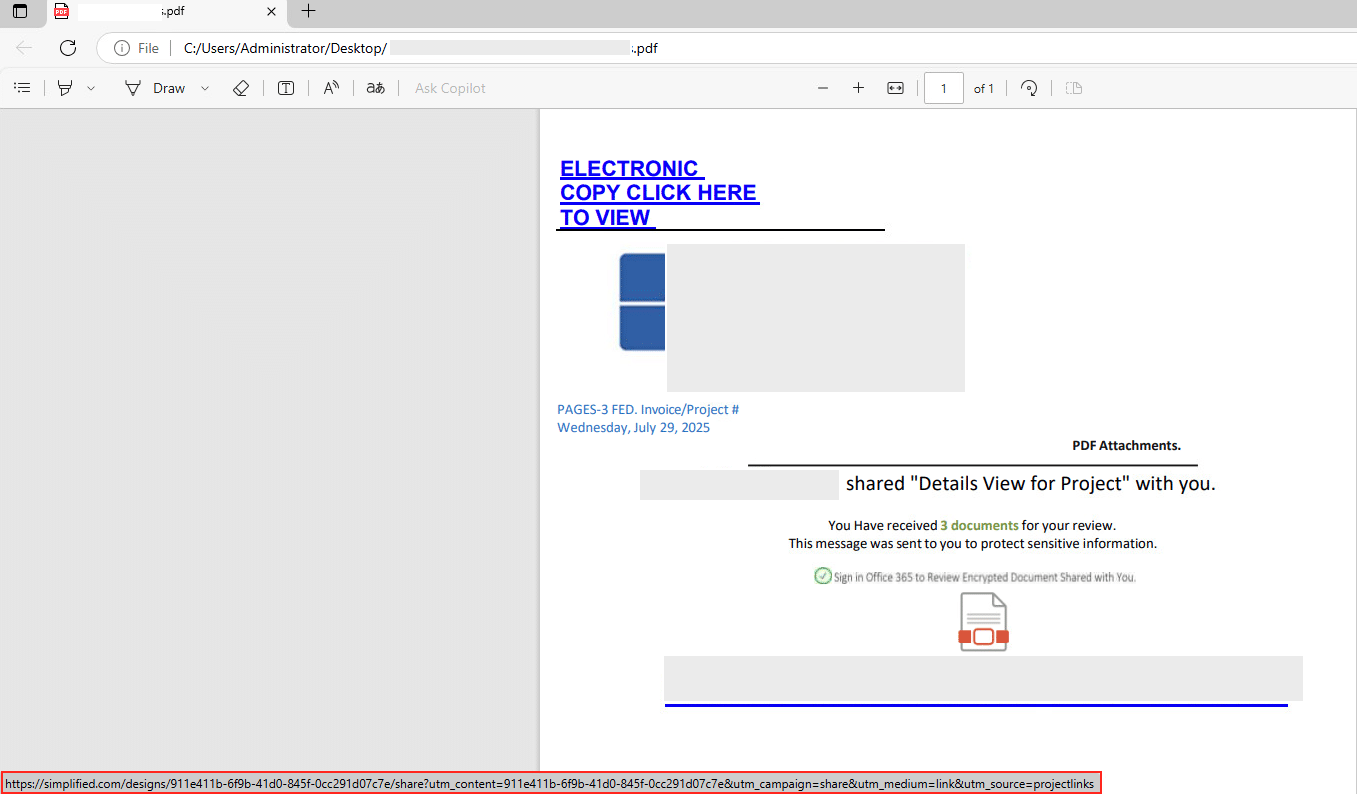

Step 2: The PDF Lure

Upon opening, the document prominently displayed the company logo of the global pharmaceutical distributor, the shared file name, and the impersonated executive’s name. This reinforced the sense of authenticity and increased the likelihood of user interaction. Inside the PDF was a link that directed the user to the Simplified AI platform (Figure 2).

Figure 2. The PDF lure displaying the global pharmaceutical distributor logo (redacted), the impersonated executive’s name (redacted), and a link to Simplified AI platform.

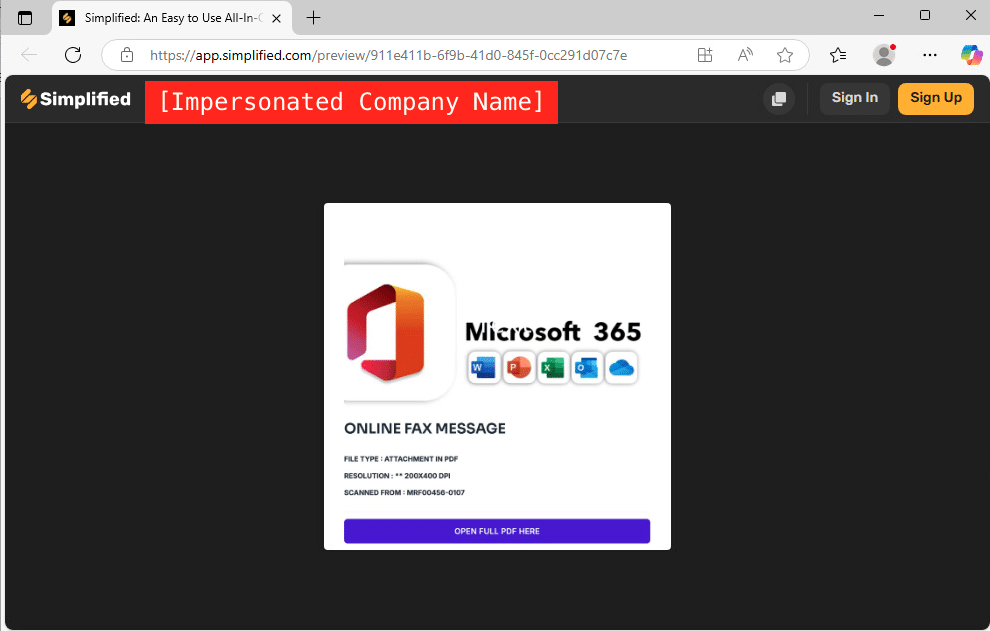

Step 3: Redirect to Simplified AI

Clicking the link led the victim to app.simplified.com. For most organizations, such domains are already whitelisted or implicitly trusted, making this redirection highly effective at avoiding detection. The page displayed the name of the impersonated global pharmaceutical distributor alongside the Simplified logo, making it look as legitimate as possible (Figure 3).

Figure 3. The phishing webpage hosted on Simplified AI’s platform, displaying the impersonated company name for the global pharmaceutical distributor (redacted) with Microsoft 365 imagery.
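One way to avoid trusting an allowlisted domain wholesale is to treat its user-generated content paths separately from the application itself. The sketch below is illustrative only: the `/preview/<UUID>` pattern is inferred from the single URL listed in the IOC section, and the table name, function name, and verdict strings are hypothetical rather than part of any product.

```python
import re
from urllib.parse import urlsplit

# Assumption: allowlisted hosts mapped to path patterns that serve
# user-generated (and therefore untrusted) content.
USER_CONTENT_PATHS = {
    "app.simplified.com": re.compile(r"^/preview/[0-9a-f-]{36}$"),
}


def verdict(url: str) -> str:
    """Return a coarse policy decision for a requested URL."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    pattern = USER_CONTENT_PATHS.get(host)
    if pattern is None:
        return "unknown-domain"  # not allowlisted: normal URL filtering applies
    if pattern.match(parts.path):
        return "inspect"  # trusted host, but the content is user-supplied
    return "allow"
```

Under this design, the domain stays usable for the marketing team while shared preview pages still receive content inspection.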

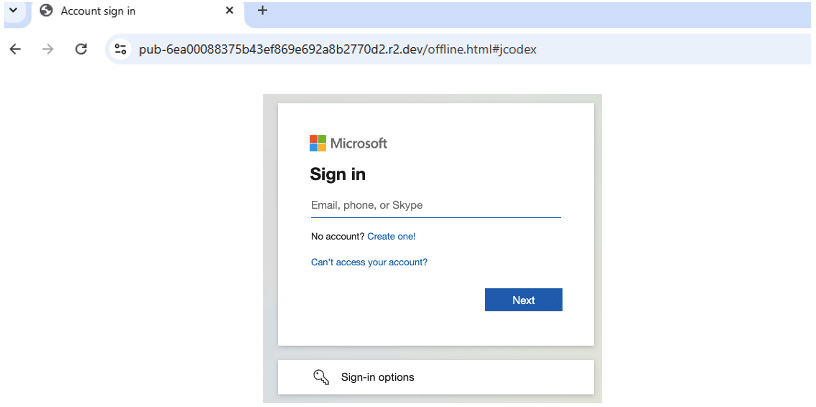

Step 4: Final Phishing Page

From there, the victim was redirected once more, this time to a spoofed Microsoft 365 login portal (Figure 4). The fake page closely mirrored Microsoft’s genuine login screen, down to a company logo and background shown to the user, and was designed solely to harvest enterprise credentials. Any usernames and passwords entered would have been transmitted directly to the threat actors.

Figure 4. The spoofed Microsoft 365 login page.
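A spoofed login page of this kind has a simple tell: it presents Microsoft 365 branding and a password field, but it is not served from a Microsoft sign-in host. The following Python sketch shows that check under loud assumptions: the host list is illustrative and incomplete, the brand markers are hypothetical, and a real deployment would take page text and form fields from a proxy or browser sensor rather than from function arguments.

```python
from urllib.parse import urlsplit

# Assumption: an illustrative, incomplete set of genuine Microsoft sign-in hosts.
MICROSOFT_LOGIN_HOSTS = {
    "login.microsoftonline.com",
    "login.microsoft.com",
    "login.live.com",
}

# Hypothetical text markers suggesting a page poses as a Microsoft 365 login.
BRAND_MARKERS = ("microsoft 365", "office 365", "sign in to your account")


def is_spoofed_m365_login(url: str, page_text: str, has_password_field: bool) -> bool:
    """True when a page looks like a Microsoft 365 login but is hosted elsewhere."""
    host = (urlsplit(url).hostname or "").lower()
    text = page_text.lower()
    claims_microsoft = any(marker in text for marker in BRAND_MARKERS)
    return has_password_field and claims_microsoft and host not in MICROSOFT_LOGIN_HOSTS
```

Organizations that federate sign-in through their own identity provider would need to add those hosts to the set, or the check would flag legitimate login pages.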

This attack chain shows how the threat actors combined social engineering (executive impersonation and company logos) with technical evasion (encrypted PDFs and the trusted domain of a popular AI platform) to build a phishing campaign capable of bypassing traditional defenses and deceiving enterprise users.

Conclusion

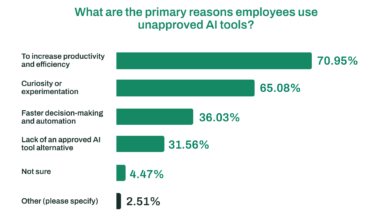

This phishing campaign demonstrates how trusted AI platforms can be weaponized by threat actors to deliver phishing attacks that appear legitimate and bypass traditional defenses. By combining executive impersonation with the abuse of widely used AI services, threat actors created a convincing attack chain capable of deceiving enterprise employees. Although this campaign is no longer active, it reflects a cross-sector threat that can be applied to any industry, highlighting the growing risks of blind trust in AI traffic. As we explored in a previous shadow AI blog, enterprise adoption of AI is accelerating and bringing new attack surfaces with it. Security teams must adapt by treating AI traffic with the same scrutiny as traffic to any unknown domain.

Protections

Modern phishing attacks bypass traditional defenses by using password-protected files, trusted AI domains, and executive impersonation. Stopping them requires visibility beyond email filters and URL blocklists.

How Cato Networks Protects

With the Cato MDR service, Cato helps enterprises detect and mitigate such threats through:

- Deep traffic inspection: Detect suspicious flows even in encrypted/password-protected files.

- Behavioral and reputation analysis: Flag unusual use of trusted platforms.

- Brand impersonation detection: Research and identify spoofed logos and impersonation techniques.

- Cloud-native visibility: Ensure AI traffic is always inspected.

Securing AI Platforms

Attackers exploit trust in AI platforms. Cato addresses this with:

- User visibility and app discovery.

- Policy enforcement and tenant controls.

- AI-aware DLP and monitoring.

- Up-to-date AI catalog enriched by analytics and threat intelligence.

Best Practices for Enterprises

- Monitor AI platform usage, official or shadow.

- Train employees to handle password-protected files carefully.

- Enforce MFA on Microsoft 365 and other critical services.

- Continuously inspect AI traffic instead of implicitly trusting it.

Indicators of Compromise (IOCs)

The following indicators were identified as part of this phishing campaign.

Legitimate AI platform abuse (used as redirect):

http[:]//app.simplified.com/preview/911e411b-6f9b-41d0-845f-0cc291d07c7e

Phishing URL (final credential harvest page):

https[:]//pub-6ea00088375b43ef869e692a8b2770d2.r2[.]dev/assets/php/endpoints/account.php

Associated IP address: 104.18.50.34
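The URLs above are defanged so that they cannot be clicked by accident. To search proxy or DNS logs for them, they first need to be converted back. The sketch below is a minimal Python example, not a Cato tool: the `refang` and `match_ioc` names are hypothetical, and the `hxxp` replacement covers a common defanging convention that these particular indicators do not use.

```python
from urllib.parse import urlsplit


def refang(indicator: str) -> str:
    """Convert a defanged indicator back to its usable form."""
    return indicator.replace("[:]", ":").replace("[.]", ".").replace("hxxp", "http")


# Indicators copied from the list above, still defanged.
DEFANGED_URLS = [
    "http[:]//app.simplified.com/preview/911e411b-6f9b-41d0-845f-0cc291d07c7e",
    "https[:]//pub-6ea00088375b43ef869e692a8b2770d2.r2[.]dev/assets/php/endpoints/account.php",
]
IOC_URLS = [urlsplit(refang(u)) for u in DEFANGED_URLS]
IOC_IPS = {"104.18.50.34"}


def match_ioc(url: str, dest_ip: str = "") -> str:
    """Return "url", "ip-only", or "none" for one observed request."""
    seen = urlsplit(url)
    for ioc in IOC_URLS:
        # Compare host and path so scheme or query differences do not hide a hit.
        if seen.hostname == ioc.hostname and seen.path == ioc.path:
            return "url"
    return "ip-only" if dest_ip in IOC_IPS else "none"
```

The sketch reports an IP-only hit separately from a URL hit. The final page was hosted on an `r2.dev` address, which is Cloudflare's public bucket hosting, so the associated IP address is probably shared with unrelated sites and is best treated as a weak signal.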

The post Cato CTRL™ Threat Research: Threat Actors Abuse Simplified AI to Steal Microsoft 365 Credentials appeared first on Cato Networks.