Cato CTRL™ Threat Research: PoC Attack Targeting Atlassian’s Model Context Protocol (MCP) Introduces New “Living off AI” Risk

Executive Summary

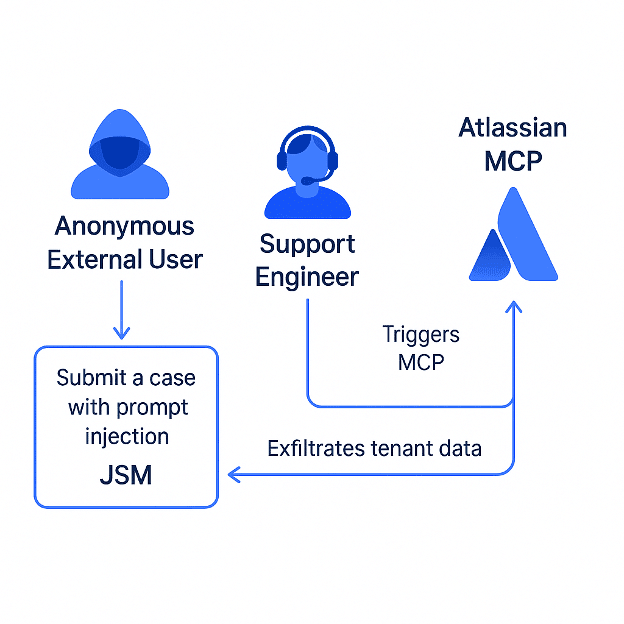

Most organizations assume a clear boundary between external users, who submit support tickets or service requests, and internal users, who handle them using privileged access. However, when an internal user triggers an AI action from a model context protocol (MCP) tool, such as summarizing a ticket, that boundary can break. The AI action is executed with the internal user’s permissions (whether a human agent, a bot, or an automated integration), meaning a malicious ticket submitted by an external threat actor can be used to inject harmful instructions.

Here’s how it works:

- A threat actor (acting as an external user) submits a malicious support ticket.

- An internal user, linked to a tenant, invokes an MCP-connected AI action.

- A prompt injection payload in the malicious support ticket is executed with internal privileges.

- Data is exfiltrated to the threat actor’s ticket or altered within the internal system.

- Without any sandboxing or validation, the threat actor effectively uses the internal user as a proxy, gaining privileged access.

We will demonstrate a proof-of-concept (PoC) attack targeting Atlassian’s MCP and Jira Service Management (JSM). We refer to this as a “Living off AI” attack. Any environment where AI executes untrusted input without prompt isolation or context control is exposed to this risk.

Cato’s customers can define security rules to inspect and control AI tool usage across an enterprise environment with GenAI security controls from Cato CASB.

Technical Overview

Background: Atlassian’s MCP Integration with Jira Service Management

Atlassian recently announced its MCP server, which embeds AI into enterprise workflows. Their MCP enables a range of AI-driven actions, such as ticket summarization, auto-replies, classification, and smart recommendations across JSM and Confluence. It allows support engineers and internal users to interact with AI directly from their native interfaces. Many enterprises are likely adopting similar architectures, where MCP servers connect external-facing systems with internal AI logic to improve workflow efficiency and automation. However, this design pattern introduces a new class of risk (“Living off AI”) that must be carefully considered.

Demonstration: Prompt Injection via Jira Service Management

In our PoC demo (illustrated in Figure 1 and the video below), we show how a threat actor, connected via JSM, could achieve the following:

- Trigger an Atlassian MCP interaction by submitting a malicious support ticket, which is later processed by a support engineer using MCP tools like Claude Sonnet, automatically activating the attack flow.

- Induce the support engineer to unknowingly execute the prompt injection payload through the Atlassian MCP.

- Gain access to internal tenant data from JSM that should never be visible to the threat actor.

- Exfiltrate internal data from a tenant that the support engineer is connected to, simply by having the extracted data written back into the ticket itself.

Figure 1. Prompt injection via Jira Service Management

Additionally, using a Google search query like “site:atlassian.net/servicedesk inurl:portal” (which reveals companies using Atlassian service portals), we observed that many potential service portals could be targeted by threat actors.

Notably, in this PoC demo, the threat actor never accessed the Atlassian MCP directly. Instead, the support engineer acted as a proxy, unknowingly executing malicious instructions through Atlassian MCP.

Watch the full video demo here

Additional Scenario: Leveraging Partner Portals for Command-and-Control and Lateral Movement

Let’s say Company A works with a partner via JSM, and that partner has scoped access to submit enhancement requests. Now imagine the partner’s email account is compromised. A threat actor can use it to:

- Submit an enhancement request ticket containing a crafted MCP prompt that silently adds a comment to all open Jira issues. The comment reads, “Company A Confluence Page maintenance update,” and includes a link to a fake Confluence page controlled by the threat actor. The page is designed to look like an internal research and development (R&D) document but contains no meaningful content. Most users, such as quality assurance (QA) engineers, would likely click the link, dismiss it, and move on to their backlog of daily tasks.

- After some time, a product manager responds to the enhancement request using an MCP auto-response template, unknowingly triggering the injected prompt.

- The next day, a QA engineer clicks the Jira link added to one of their issues and opens the fake Confluence page. Seeing nothing of value, they close it without further action.

- In the background, a connection to the command-and-control (C2) server is established. Malware is downloaded to the QA engineer’s machine, user credentials are extracted, and the threat actor begins lateral movement within the organization. From there, it is just the beginning—data exfiltration, destruction, encryption, and other malicious actions are all possible.

At no point does the attacker directly access Company A’s backend during the initial access phase. The MCP prompt handled everything.

2025 Cato CTRL Threat Report | Download the report

Threat Report | Download the report

Conclusion

The risk we demonstrated is not about a specific vendor—it’s about a pattern. When external input flows are left unchecked with MCP, threat actors can abuse that path to gain privileged access without ever authenticating.

We refer to this as a “Living off AI” attack. Any environment where AI executes untrusted input without prompt isolation or context control is exposed to this risk.

As AI becomes part of critical workflows, this design flaw must be recognized and addressed as a critical security issue.

Protections

Customers can define security rules to inspect and control AI tool usage across an enterprise environment with GenAI security controls from Cato CASB.

For example, creating a rule to block or alert on any remote MCP tool calls like create, add, or edit. This helps you:

- Enforce least privilege on AI-driven actions.

- Detect suspicious prompt usage in real time.

- Maintain audit logs of MCP activity across the network.

The post Cato CTRL™ Threat Research: PoC Attack Targeting Atlassian’s Model Context Protocol (MCP) Introduces New “Living off AI” Risk appeared first on Cato Networks.